Artificial intelligence (AI) has significantly transformed many industries, including software development. One of the most promising advances in this area is AI-driven code generation. Tools like GitHub Copilot, OpenAI's Codex, and others have shown remarkable capabilities in assisting developers: generating code snippets, automating routine tasks, and even offering complete solutions to complex problems. However, AI-generated code is not immune to errors, and knowing how to anticipate, identify, and fix these errors is essential. This practice is known as error guessing in AI code generation. This article explores the concept of error guessing, its significance, and the best practices developers can adopt to make AI-generated code more reliable and robust.

Understanding Error Guessing

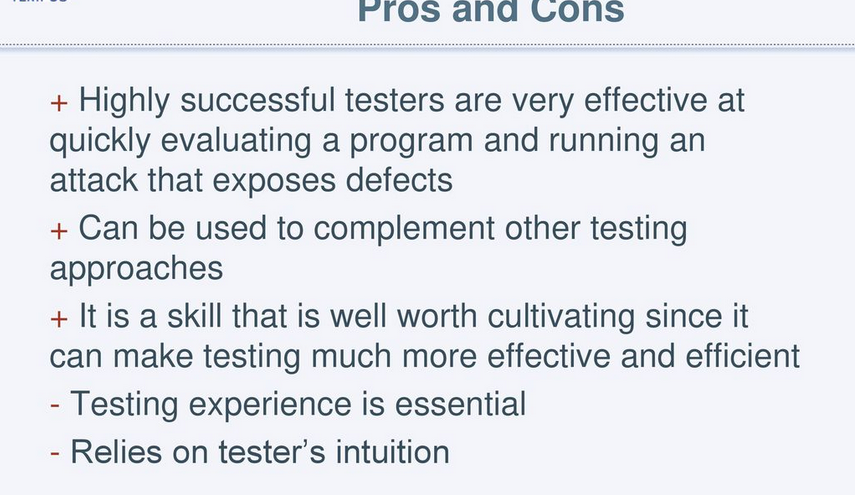

Error guessing is a software testing technique in which testers anticipate the kinds of errors that may occur in a system based on their experience, knowledge, and intuition. In the context of AI code generation, error guessing means predicting the mistakes the AI is likely to make when generating code. These errors range from syntax problems to logical flaws and can arise from several sources, including ambiguous prompts, incomplete data, or limitations in the AI's training.

Error guessing in AI code generation is vital because, unlike traditional software development, where a human developer writes the code, AI-generated code is produced from patterns learned from vast datasets. As a result, the AI can produce code that looks correct at first glance but contains subtle errors that could cause significant issues if they are not discovered and corrected.

Common Errors in AI-Generated Code

Before delving into techniques and best practices for error guessing, it’s important to know the types of mistakes commonly seen in AI-generated code:

Syntax Errors: These are the most straightforward errors, where the generated code does not follow the syntax rules of the programming language. While modern AI models are good at avoiding simple syntax errors, such errors still occur, especially in complex code structures or in less common languages.
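
Because syntax errors are the easiest class to detect mechanically, a cheap first check is to parse generated code before running or reviewing it. The following sketch uses the standard library's `ast.parse`, which checks syntax without executing anything. The helper name `syntax_error_in` is hypothetical, and the check only reflects the grammar of the Python version that runs it.

```python
import ast


def syntax_error_in(source: str):
    """Return a short description of the first syntax error, or None if the code parses."""
    try:
        # ast.parse builds a syntax tree but never executes the code
        ast.parse(source)
    except SyntaxError as err:
        return f"line {err.lineno}: {err.msg}"
    return None


# A generated snippet with an unclosed parameter list
snippet = "def area(width, height:\n    return width * height\n"
print(syntax_error_in(snippet))  # prints a description of the error
```

Since nothing is executed, this check is safe to run on untrusted output as the first stage of a review pipeline.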

Logical Errors: These occur when the code, although syntactically correct, does not behave as expected. Logical errors can be hard to spot because the code may run without issues but produce incorrect results.
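
A classic illustration is a date helper that runs cleanly but encodes an incomplete rule. In the sketch below, `is_leap_year_generated` stands in for hypothetical AI output: it ignores the Gregorian exception for century years, so it gives the right answer for most inputs and fails only on years such as 1900.

```python
def is_leap_year_generated(year: int) -> bool:
    # Hypothetical AI output: plausible and error-free at runtime, but incomplete
    return year % 4 == 0


def is_leap_year(year: int) -> bool:
    # Gregorian rule: divisible by 4, except century years not divisible by 400
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


# Only 1900 disagrees among these sample years
for year in (2024, 2023, 2000, 1900):
    print(year, is_leap_year_generated(year), is_leap_year(year))
```

Error guessing here means knowing that calendar logic tends to fail at century boundaries and choosing test inputs accordingly.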

Contextual Misunderstandings: AI models generate code based on the context provided in the prompt. If the prompt is ambiguous or lacks sufficient detail, the AI may generate code that does not match the intended functionality.
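
As an illustration, the prompt "remove duplicates from the list" does not say whether order matters. The hypothetical generated function below uses a `set`, which removes duplicates but makes no promise about order. If the developer needed first-occurrence order, the code is correct under one reading of the prompt and wrong under the other.

```python
def dedupe_generated(items):
    # Hypothetical AI output: a set drops duplicates but does not keep the original order
    return list(set(items))


def dedupe_preserving_order(items):
    # dict keys keep insertion order (guaranteed since Python 3.7),
    # so each item stays at the position of its first occurrence
    return list(dict.fromkeys(items))


data = ["beta", "alpha", "beta", "gamma", "alpha"]
print(dedupe_generated(data))         # same three items, order not guaranteed
print(dedupe_preserving_order(data))  # ['beta', 'alpha', 'gamma']
```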

Incomplete Code: Sometimes AI-generated code is unfinished or requires additional human input to function correctly. If this is not addressed, it can lead to runtime errors or unexpected behavior.
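
Unfinished output often shows up as placeholder bodies, which a small static scan can flag before the code reaches review. This sketch walks the syntax tree with Python's `ast` module and reports functions whose body is only `pass`, `...`, or a `raise NotImplementedError`. The function names are hypothetical, and the scan will not catch stubs that return dummy values.

```python
import ast


def _is_placeholder(stmt: ast.stmt) -> bool:
    """True for `pass`, a bare `...`, or `raise NotImplementedError[(...)]`."""
    if isinstance(stmt, ast.Pass):
        return True
    if isinstance(stmt, ast.Expr) and isinstance(stmt.value, ast.Constant):
        return stmt.value.value is Ellipsis
    if isinstance(stmt, ast.Raise) and stmt.exc is not None:
        exc = stmt.exc.func if isinstance(stmt.exc, ast.Call) else stmt.exc
        return isinstance(exc, ast.Name) and exc.id == "NotImplementedError"
    return False


def find_stubs(source: str) -> list[str]:
    """Return the names of functions whose body contains only placeholders."""
    stubs = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            body = node.body
            # Ignore a leading docstring when judging the body
            if (body and isinstance(body[0], ast.Expr)
                    and isinstance(body[0].value, ast.Constant)
                    and isinstance(body[0].value.value, str)):
                body = body[1:]
            if not body or all(_is_placeholder(stmt) for stmt in body):
                stubs.append(node.name)
    return stubs


generated = '''
def load(path):
    """Read the file."""
    pass

def parse(text):
    ...

def validate(record):
    raise NotImplementedError("TODO")

def save(record):
    return True
'''
print(find_stubs(generated))  # ['load', 'parse', 'validate']
```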

Security Vulnerabilities: AI-generated code might unintentionally introduce security vulnerabilities, such as SQL injection risks or weak encryption practices, especially if the AI model was not trained with security best practices in mind.
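
SQL injection risks in generated code usually come from building queries by string interpolation. The sketch below uses Python's built-in `sqlite3` module with an in-memory database; the table, function names, and payload are illustrative. The unsafe version pastes user input into the SQL text, while the safe version passes it as a bound parameter through a `?` placeholder.

```python
import sqlite3


def make_db():
    # Illustrative in-memory database with two users
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, api_key TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "key-a"), ("bob", "key-b")],
    )
    return conn


def find_user_unsafe(conn, name):
    # Pattern to watch for in generated code: user input pasted into the SQL string
    query = f"SELECT name FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, name):
    # Parameterized query: the driver treats `name` strictly as data
    return conn.execute("SELECT name FROM users WHERE name = ?", (name,)).fetchall()


conn = make_db()
payload = "' OR '1'='1"
print(find_user_unsafe(conn, payload))  # returns every row
print(find_user_safe(conn, payload))    # []
```

Guessing that any f-string or concatenation near a SQL keyword is suspect is a cheap heuristic that catches this class of bug during review.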

Techniques for Error Guessing in AI Code Generation

Effective error guessing requires a combination of experience, critical thinking, and a systematic approach to identifying potential issues in AI-generated code. Here are some techniques that can help:

Reviewing Prompts for Clarity: The quality of AI-generated code depends heavily on the quality of the input prompt. Vague or ambiguous prompts can lead to incorrect or incomplete code. By carefully reviewing and refining prompts before submitting them to the AI, developers can reduce the likelihood of errors.

Analyzing Edge Cases: AI models are trained on large datasets that represent common coding patterns, so they may struggle with edge cases or unusual scenarios. Developers should consider likely edge cases, such as empty inputs, boundary values, and extreme magnitudes, and test the generated code against them to expose weaknesses.
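
Guessed edge cases become concrete once they are written down as inputs and run against the generated code. In this sketch, `average` is a hypothetical piece of generated code and `probe` is an illustrative helper that records both exceptions and non-finite floating-point results. An empty list crashes the function, and two very large values silently overflow to infinity.

```python
import math


def average(values):
    # Hypothetical AI-generated code: correct for typical, non-empty input
    return sum(values) / len(values)


# Inputs chosen by guessing where the implementation might break
EDGE_CASES = [[], [0], [-1, 1], [1e308, 1e308]]


def probe(func, cases):
    """Run func on each case and collect crashes and non-finite results."""
    problems = []
    for case in cases:
        try:
            result = func(case)
        except Exception as exc:
            problems.append((case, type(exc).__name__))
            continue
        if isinstance(result, float) and not math.isfinite(result):
            problems.append((case, "non-finite result"))
    return problems


for case, problem in probe(average, EDGE_CASES):
    print(case, "->", problem)
```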

Cross-Checking AI Output: Comparing AI-generated code with known, trusted solutions can help identify discrepancies. This technique is especially valuable for complex algorithms or domain-specific logic.
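
When a trusted implementation exists, the comparison can be automated as differential testing: feed both versions the same random inputs and stop at the first disagreement. The sketch below treats `statistics.median` from the Python standard library as the trusted reference; `generated_median` and `cross_check` are hypothetical names.

```python
import random
import statistics


def generated_median(values):
    # Hypothetical AI output: right for odd-length input, but for even-length
    # input it returns the upper middle element instead of averaging the two
    return sorted(values)[len(values) // 2]


def cross_check(candidate, reference, trials=1000, seed=0):
    """Compare candidate and reference on random inputs; return the first mismatch."""
    rng = random.Random(seed)  # fixed seed keeps failures reproducible
    for _ in range(trials):
        data = [rng.randint(-100, 100) for _ in range(rng.randint(1, 10))]
        if candidate(data) != reference(data):
            return data
    return None


counterexample = cross_check(generated_median, statistics.median)
print("counterexample:", counterexample)
```

A returned counterexample is a ready-made regression test; `None` only means no mismatch was found in the sampled inputs, not that the code is correct.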

Using Automated Testing Tools: Integrating automated testing tools into the development process helps catch errors in AI-generated code. Unit tests, integration tests, and static analysis tools can quickly identify issues that might be overlooked during manual review.

Employing Peer Reviews: Having other developers review the AI-generated code can provide fresh perspectives and uncover problems that might otherwise be missed. Peer reviews are an effective way to draw on collective experience and improve code quality.

Monitoring AI Model Updates: AI models are frequently updated with new training data and improvements. Developers should stay informed about these updates, because changes to the model can affect the kinds of errors it produces. Understanding the model's strengths and limitations can guide error guessing efforts.

Best Practices for Mitigating Errors in AI Code Generation

In addition to the techniques above, developers can follow several best practices to improve the reliability of AI-generated code:

Incremental Code Generation: Instead of generating large blocks of code at once, developers can request smaller, incremental snippets. This keeps code reviews manageable and makes errors easier to spot.

Prompt Engineering: Investing time in well-structured, detailed prompts can significantly improve the accuracy of AI-generated code. Prompt engineering involves experimenting with different phrasings and giving explicit instructions that steer the AI in the right direction.
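
One way to make prompts explicit and repeatable is to assemble them from named fields instead of writing them freehand. The `CodePrompt` class below is a hypothetical helper, not part of any tool's API. It renders the task, target signature, requirements, and input/output examples into plain text that could be pasted into an assistant.

```python
from dataclasses import dataclass, field


@dataclass
class CodePrompt:
    """Hypothetical container that forces requirements to be written down explicitly."""
    task: str
    language: str
    signature: str
    requirements: list[str] = field(default_factory=list)
    examples: list[tuple[str, str]] = field(default_factory=list)

    def render(self) -> str:
        lines = [
            f"Write a {self.language} function with the signature `{self.signature}`.",
            f"Task: {self.task}",
        ]
        if self.requirements:
            lines.append("Requirements:")
            lines.extend(f"- {req}" for req in self.requirements)
        if self.examples:
            lines.append("Examples:")
            lines.extend(f"- {given} -> {expected}" for given, expected in self.examples)
        return "\n".join(lines)


prompt = CodePrompt(
    task="Remove duplicate items from a list.",
    language="Python",
    signature="dedupe(items: list) -> list",
    requirements=[
        "Preserve the order in which items first appear.",
        "Do not modify the input list.",
    ],
    examples=[("[3, 1, 3, 2]", "[3, 1, 2]")],
)
print(prompt.render())
```

Structuring the prompt this way makes missing details, such as ordering or mutation rules, visible as empty fields before the request is sent.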

Combining AI with Human Expertise: While AI-generated code can automate many aspects of development, it should not replace human oversight. Developers should combine AI capabilities with their own expertise to ensure that the final code is robust, secure, and meets the project's requirements.

Documenting Known Issues: Maintaining a record of known issues and common errors in AI-generated code helps developers anticipate and address these problems in future projects. Such documentation is a valuable resource for error guessing and continuous improvement.
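
A record of known issues is most useful when it can also be checked automatically. The sketch below keeps a small, hypothetical catalog of error patterns as data and scans generated source line by line with regular expressions. Regex matching is deliberately crude and will produce false positives and misses; treat it as a starting point for a team's own list, not a substitute for a real linter.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class KnownIssue:
    name: str
    pattern: re.Pattern
    advice: str


# Hypothetical team catalog; extend it as new failure modes are observed
KNOWN_ISSUES = [
    KnownIssue("bare-except", re.compile(r"except\s*:"), "Catch specific exception types."),
    KnownIssue("eval-call", re.compile(r"\beval\s*\("), "Avoid eval on untrusted input."),
    KnownIssue(
        "sql-fstring",
        re.compile(r"f[\"'](?:SELECT|INSERT|UPDATE|DELETE)\b", re.IGNORECASE),
        "Use parameterized queries.",
    ),
]


def scan(source: str) -> list[tuple[int, KnownIssue]]:
    """Return (line number, issue) pairs for every catalog pattern found."""
    hits = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for issue in KNOWN_ISSUES:
            if issue.pattern.search(line):
                hits.append((lineno, issue))
    return hits


generated = (
    "try:\n"
    "    config = eval(raw_config)\n"
    "except:\n"
    "    config = {}\n"
    'query = f"SELECT * FROM orders WHERE id = {order_id}"\n'
)
for lineno, issue in scan(generated):
    print(f"line {lineno}: {issue.name}: {issue.advice}")
```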

Continuous Learning and Adaptation: As AI models evolve, so should the techniques for error guessing. Developers should stay up to date on advances in AI code generation and adapt their techniques accordingly. Continuous learning is crucial to staying ahead of potential errors.

Conclusion

Error guessing in AI code generation is an essential skill for developers working with AI-driven tools. By understanding the common types of errors, applying effective techniques, and following best practices, developers can significantly reduce the risks associated with AI-generated code. As AI plays a growing role in software development, the ability to anticipate and mitigate errors will become increasingly important. By combining AI capabilities with human expertise, developers can harness the full potential of AI code generation while ensuring the quality and reliability of their software projects.