In the realm of artificial intelligence (AI) and machine learning, the quality and performance of code are pivotal for ensuring reliable and effective systems. Synthetic monitoring has emerged as a crucial way of assessing these qualities, offering a systematic method to evaluate how well AI models and systems perform under various conditions. This article delves into synthetic monitoring techniques, highlighting their relevance to AI code quality and performance evaluation.

What is Synthetic Monitoring?

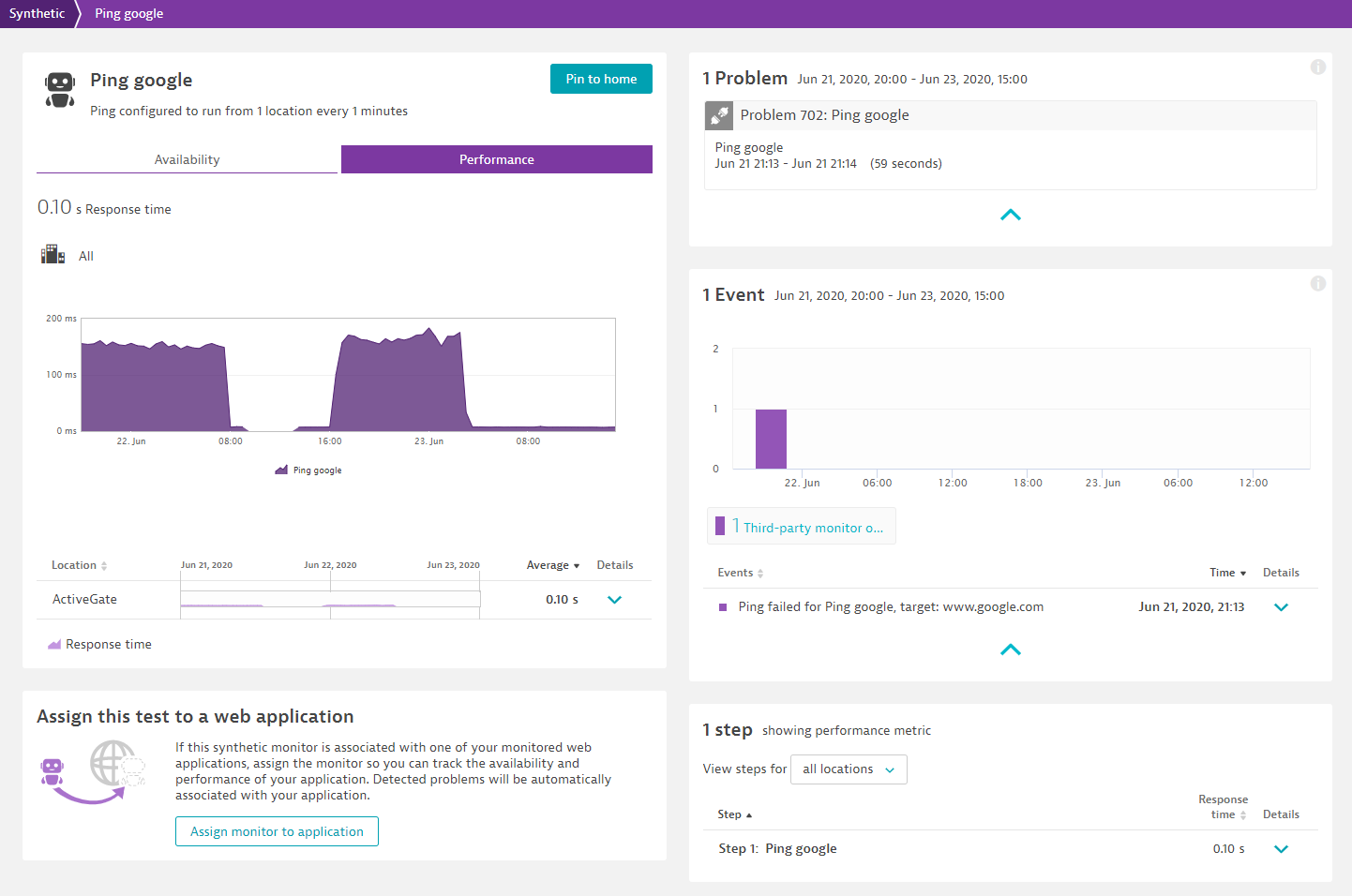

Synthetic monitoring, also known as proactive monitoring, involves simulating user interactions or system activities in order to test and assess the performance of an application or service. Unlike real-user monitoring, which captures data from actual user interactions, synthetic monitoring uses predefined scripts and scenarios to create controlled testing environments. This approach allows for consistent and repeatable tests, making it a valuable tool for evaluating AI systems.

Importance of Synthetic Monitoring in AI

Predictive Performance Evaluation: Synthetic monitoring enables predictive performance evaluation by testing AI models under various conditions before deployment. This proactive approach helps identify potential issues and performance bottlenecks early in the development cycle.

Consistency and Repeatability: AI systems often exhibit variability in performance due to the dynamic nature of their algorithms. Synthetic monitoring provides a consistent and repeatable way to test and evaluate code, ensuring that performance metrics are reliable and comparable.

Early Detection of Anomalies: By simulating different user behaviors and scenarios, synthetic monitoring can uncover anomalies and potential weaknesses in AI code that might not be evident through traditional testing methods.

Benchmarking and Performance Metrics: Synthetic monitoring allows for benchmarking AI models against predefined performance metrics. This helps in setting performance expectations and comparing different models or versions to determine which performs better under simulated conditions.

Techniques for Synthetic Monitoring in AI

Scenario-Based Testing: Scenario-based testing involves creating specific use cases or scenarios that an AI system may encounter in the real world. By simulating these scenarios, developers can assess how well the AI model performs and whether it meets the desired quality standards. For example, for a natural language processing (NLP) model, scenarios might include various sentence structures, languages, or contexts to evaluate the model’s adaptability.
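As a minimal sketch of scenario-based testing, the Python snippet below runs a small set of named scenarios against a model and records which ones pass. The `toy_sentiment_model` function, the `Scenario` class, and the scenario list are all hypothetical stand-ins invented for illustration; a real setup would call the actual NLP model instead.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Scenario:
    name: str       # short identifier for the report
    text: str       # simulated user input
    expected: str   # label the model should return


def toy_sentiment_model(text: str) -> str:
    """Hypothetical stand-in for a real NLP model: keyword-based sentiment."""
    cleaned = text.lower().replace("!", " ").replace(".", " ").replace(",", " ")
    words = set(cleaned.split())
    if words & {"bad", "terrible", "awful"}:
        return "negative"
    if words & {"good", "great", "excellent"}:
        return "positive"
    return "neutral"


def run_scenarios(model: Callable[[str], str],
                  scenarios: List[Scenario]) -> Dict[str, bool]:
    """Run every scenario and record whether the model matched the expectation."""
    return {s.name: model(s.text) == s.expected for s in scenarios}


SCENARIOS = [
    Scenario("simple_positive", "The service was great.", "positive"),
    Scenario("simple_negative", "Terrible experience!", "negative"),
    Scenario("uppercase", "THIS IS GOOD", "positive"),
    # Negation is a context the keyword model cannot handle.
    Scenario("negation", "The food was not good.", "negative"),
    Scenario("neutral", "The package arrived on Tuesday.", "neutral"),
]

results = run_scenarios(toy_sentiment_model, SCENARIOS)
pass_rate = sum(results.values()) / len(results)

for name, passed in results.items():
    print(f"{name}: {'PASS' if passed else 'FAIL'}")
print(f"pass rate: {pass_rate:.0%}")
```

The negation scenario is included on purpose: it fails for this keyword-based stand-in, which is the kind of weakness a scenario suite is meant to surface.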

Load Testing: Load testing evaluates how an AI system performs under various levels of load or stress. This technique involves simulating different numbers of concurrent users or requests to assess the system’s scalability and response time. For instance, a recommendation system might be tested with a high volume of queries to ensure it can handle high traffic without degradation in performance.
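The idea can be sketched with Python's standard library alone. The example below assumes a hypothetical `fake_recommend` function that sleeps briefly to imitate inference latency; in practice the call would go to the real recommendation service. It fires the same number of requests at several concurrency levels and reports mean latency, an approximate 95th percentile, and throughput.

```python
import math
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def fake_recommend(user_id: int) -> list:
    """Hypothetical stand-in for a recommendation endpoint."""
    time.sleep(0.005)  # simulate inference latency
    return [user_id % 7, user_id % 11, user_id % 13]


def timed_call(user_id: int) -> float:
    """Return the latency of a single simulated request, in seconds."""
    start = time.perf_counter()
    fake_recommend(user_id)
    return time.perf_counter() - start


def run_load_test(concurrency: int, total_requests: int) -> dict:
    """Send total_requests requests using `concurrency` worker threads."""
    wall_start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = sorted(pool.map(timed_call, range(total_requests)))
    wall_time = time.perf_counter() - wall_start
    # Nearest-rank approximation of the 95th percentile.
    p95_index = max(0, math.ceil(0.95 * len(latencies)) - 1)
    return {
        "concurrency": concurrency,
        "requests": total_requests,
        "mean_s": statistics.mean(latencies),
        "p95_s": latencies[p95_index],
        "throughput_rps": total_requests / wall_time,
    }


reports = [run_load_test(c, total_requests=40) for c in (1, 4, 8)]
for r in reports:
    print(f"concurrency={r['concurrency']:>2}  mean={r['mean_s'] * 1000:.1f} ms  "
          f"p95={r['p95_s'] * 1000:.1f} ms  throughput={r['throughput_rps']:.0f} req/s")
```

Because the stand-in only sleeps, throughput rises with concurrency here. A real model bound by CPU or GPU would typically show latency climbing as load grows, which is the degradation a load test looks for.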

Performance Benchmarking: Performance benchmarking involves comparing an AI model’s performance against predefined standards or other models. This technique helps in identifying performance gaps and areas for improvement. Benchmarks may include metrics such as accuracy, response time, and resource utilization.
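A benchmark of this kind might look like the sketch below, which scores two models on accuracy and mean response time and checks them against predefined thresholds. The two models, the dataset, and the threshold values are illustrative assumptions, not recommended targets.

```python
import time
from typing import Callable, Dict, List, Tuple

# Hypothetical labelled benchmark set: (input, expected label).
BENCHMARK: List[Tuple[int, bool]] = [(n, n % 2 == 0) for n in range(1000)]

# Assumed acceptance thresholds, chosen only for illustration.
THRESHOLDS = {"min_accuracy": 0.95, "max_mean_latency_s": 0.01}


def model_a(n: int) -> bool:
    """Stand-in model that is always correct on this task."""
    return n % 2 == 0


def model_b(n: int) -> bool:
    """Stand-in model that misclassifies numbers ending in 5."""
    return n % 2 == 0 or n % 10 == 5


def benchmark(model: Callable[[int], bool],
              dataset: List[Tuple[int, bool]]) -> Dict[str, float]:
    """Measure accuracy and mean per-call latency over the dataset."""
    correct = 0
    start = time.perf_counter()
    for x, expected in dataset:
        if model(x) == expected:
            correct += 1
    elapsed = time.perf_counter() - start
    return {
        "accuracy": correct / len(dataset),
        "mean_latency_s": elapsed / len(dataset),
    }


def meets_thresholds(metrics: Dict[str, float]) -> bool:
    """Compare measured metrics with the predefined standards."""
    return (metrics["accuracy"] >= THRESHOLDS["min_accuracy"]
            and metrics["mean_latency_s"] <= THRESHOLDS["max_mean_latency_s"])


results = {name: benchmark(fn, BENCHMARK)
           for name, fn in {"model_a": model_a, "model_b": model_b}.items()}

for name, metrics in results.items():
    verdict = "meets" if meets_thresholds(metrics) else "misses"
    print(f"{name}: accuracy={metrics['accuracy']:.3f} "
          f"mean_latency={metrics['mean_latency_s'] * 1e6:.2f} us ({verdict} thresholds)")
```

Resource utilization is left out to keep the sketch short; it could be added as a further metric alongside accuracy and latency.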

Fault Injection Testing: Fault injection testing involves deliberately introducing faults or errors into the AI system to evaluate its resilience and recovery mechanisms. This technique helps in assessing how well the system handles unexpected problems or failures, ensuring robustness and reliability.
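One simple way to illustrate fault injection is to wrap a prediction function so that it fails at a configurable rate, then observe how a retry-and-fallback layer copes. Everything in the sketch below (`predict`, `make_faulty`, `resilient_predict`, the failure rate, and the retry count) is a hypothetical example rather than a prescribed design.

```python
import random
from typing import Callable, Optional, Tuple


class InjectedFault(Exception):
    """Raised by the wrapper to simulate a backend failure."""


def predict(x: int) -> int:
    """Hypothetical model call."""
    return x * 2


def make_faulty(predict_fn: Callable[[int], int],
                failure_rate: float, seed: int = 0) -> Callable[[int], int]:
    """Wrap predict_fn so it raises InjectedFault with the given probability."""
    rng = random.Random(seed)  # seeded so runs are repeatable

    def wrapped(x: int) -> int:
        if rng.random() < failure_rate:
            raise InjectedFault("simulated backend failure")
        return predict_fn(x)

    return wrapped


def resilient_predict(predict_fn: Callable[[int], int], x: int,
                      retries: int = 2,
                      fallback: Optional[int] = None) -> Tuple[Optional[int], str]:
    """Recovery mechanism under test: retry, then return a fallback value."""
    for _ in range(retries + 1):
        try:
            return predict_fn(x), "ok"
        except InjectedFault:
            continue
    return fallback, "fallback"


faulty_predict = make_faulty(predict, failure_rate=0.3, seed=42)
outcomes = [(x, *resilient_predict(faulty_predict, x)) for x in range(200)]
fallback_count = sum(1 for _, _, status in outcomes if status == "fallback")

print(f"requests: {len(outcomes)}, served by fallback: {fallback_count}")
```

Seeding the random generator keeps the injected faults repeatable, so the same failure pattern can be replayed after a fix.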

Synthetic Data Generation: Synthetic data generation involves creating artificial datasets that mimic real-world data. This technique is particularly useful when actual data is scarce or sensitive. By testing AI models on synthetic data, developers can evaluate how well the models generalize to different data distributions and scenarios.
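The sketch below generates two synthetic datasets from Gaussian distributions, one matching the conditions a model was tuned for and one with shifted class means, and compares accuracy on both. The distributions, the fixed-threshold classifier, and all parameter values are assumptions made for illustration only.

```python
import random
from typing import Callable, List, Tuple

Sample = Tuple[float, int]  # (feature value, class label)


def make_dataset(n: int, mean_neg: float, mean_pos: float,
                 std: float, seed: int) -> List[Sample]:
    """Draw n samples, alternating classes, from two Gaussian distributions."""
    rng = random.Random(seed)
    data = []
    for i in range(n):
        label = i % 2
        mean = mean_pos if label == 1 else mean_neg
        data.append((rng.gauss(mean, std), label))
    return data


def threshold_model(x: float) -> int:
    """Hypothetical classifier: predicts class 1 when the feature exceeds 0."""
    return 1 if x > 0.0 else 0


def accuracy(model: Callable[[float], int], data: List[Sample]) -> float:
    """Fraction of samples whose predicted label matches the true label."""
    return sum(model(x) == y for x, y in data) / len(data)


# Data resembling the conditions the model was built for.
baseline = make_dataset(2000, mean_neg=-2.0, mean_pos=2.0, std=1.0, seed=1)
# Same task, but both class means have drifted upward.
shifted = make_dataset(2000, mean_neg=-0.5, mean_pos=3.5, std=1.0, seed=2)

baseline_acc = accuracy(threshold_model, baseline)
shifted_acc = accuracy(threshold_model, shifted)

print(f"baseline accuracy: {baseline_acc:.3f}")
print(f"shifted accuracy:  {shifted_acc:.3f}")
```

The drop in accuracy on the shifted set shows how synthetic data can probe generalization without touching scarce or sensitive real records.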

Best Practices for Synthetic Monitoring in AI

Define Clear Objectives: Before implementing synthetic monitoring, it’s essential to define clear objectives and performance criteria. This ensures that the monitoring efforts are aligned with the desired outcomes and provides a basis for evaluating the effectiveness of the AI system.

Develop Realistic Scenarios: For synthetic monitoring to be effective, the simulated scenarios should accurately reflect real-world conditions. This includes considering different user behaviors, data patterns, and potential edge cases that the AI system may encounter.

Automate Testing: Automating synthetic monitoring processes can significantly improve efficiency and consistency. Automated tests can be scheduled to run regularly, providing continuous insights into the AI system’s performance and quality.
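As a rough illustration of scheduled checks, the snippet below runs a small registry of synthetic checks at a fixed interval and keeps a history of the outcomes. The check functions and the interval are hypothetical; in production this loop would more likely be replaced by a cron job or a CI scheduler.

```python
import time
from typing import Callable, Dict, List


def check_latency() -> bool:
    """Hypothetical check: a stand-in model call must finish within 50 ms."""
    start = time.perf_counter()
    sum(range(10_000))  # placeholder for a real model call
    return time.perf_counter() - start < 0.05


def check_output_shape() -> bool:
    """Hypothetical check: the model must return two class probabilities."""
    fake_output = [0.1, 0.9]  # placeholder for a real prediction
    return len(fake_output) == 2


CHECKS: Dict[str, Callable[[], bool]] = {
    "latency": check_latency,
    "output_shape": check_output_shape,
}


def run_checks(checks: Dict[str, Callable[[], bool]]) -> Dict[str, bool]:
    """Run every check once; a check that raises counts as a failure."""
    results = {}
    for name, check in checks.items():
        try:
            results[name] = bool(check())
        except Exception:
            results[name] = False
    return results


def run_on_schedule(checks: Dict[str, Callable[[], bool]], interval_s: float,
                    runs: int) -> List[Dict[str, bool]]:
    """Run the check suite `runs` times, waiting interval_s between runs."""
    history = []
    for i in range(runs):
        history.append(run_checks(checks))
        if i < runs - 1:
            time.sleep(interval_s)
    return history


history = run_on_schedule(CHECKS, interval_s=0.01, runs=3)
for i, result in enumerate(history):
    print(f"run {i}: {result}")
```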

Monitor and Analyze Results: Regularly monitoring and analyzing the results of synthetic tests is vital for identifying trends, issues, and areas for improvement. Use monitoring tools and dashboards to visualize performance metrics and gain actionable insights.
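One basic form of trend analysis is to compare each new measurement with a rolling baseline of recent runs. The sketch below flags any run whose latency exceeds the mean of the previous five runs by more than 20%. The window size, the tolerance, and the sample latencies are assumed values for illustration.

```python
import statistics
from typing import List, Tuple


def detect_regressions(history: List[float], window: int = 5,
                       tolerance: float = 0.2) -> List[Tuple[int, float, float]]:
    """Flag runs that exceed the rolling baseline by more than `tolerance`.

    Returns a list of (run index, observed value, baseline) tuples.
    """
    alerts = []
    for i in range(window, len(history)):
        baseline = statistics.mean(history[i - window:i])
        if history[i] > baseline * (1 + tolerance):
            alerts.append((i, history[i], baseline))
    return alerts


# Hypothetical latency measurements (ms) from successive synthetic test runs.
latencies_ms = [100, 102, 98, 101, 99, 100, 103, 150, 152, 151]

alerts = detect_regressions(latencies_ms)
for index, value, baseline in alerts:
    print(f"run {index}: {value} ms vs rolling baseline {baseline:.1f} ms")
```

A rolling baseline adapts to gradual drift, so a sustained slowdown eventually stops raising alerts; a fixed reference value would be the alternative when that behavior is not wanted.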

Iterate and Refine: Synthetic monitoring is an iterative process. Based on the insights gained from monitoring, refine the AI system, update test scenarios, and continuously improve the quality and performance of the code.

Challenges and Limitations

Complexity of AI Systems: AI systems are often complex and may exhibit non-linear behaviors that are difficult to simulate accurately. Ensuring that synthetic monitoring scenarios capture the full spectrum of potential behaviors can be challenging.

Resource Intensive: Synthetic monitoring can be resource-intensive, requiring significant computational power and time to simulate scenarios and generate data. Balancing resource allocation with monitoring needs is essential.

Data Accuracy: The accuracy of synthetic data is critical for effective monitoring. If the synthetic data does not accurately represent real-world conditions, the results of the monitoring may not be reliable.

Future Directions

As AI technology continues to evolve, synthetic monitoring techniques will likely become more sophisticated. Advancements in automation, machine learning, and data generation should enhance the capabilities of synthetic monitoring, enabling more accurate and comprehensive evaluations of AI code quality and performance. In addition, integrating synthetic monitoring with real-time analytics and adaptive testing methods could offer deeper insights and improve the overall robustness of AI systems.

Conclusion

Synthetic monitoring is a powerful technique for evaluating AI code quality and performance. By simulating user interactions, load conditions, and fault scenarios, developers can gain valuable insights into how well their AI models perform and identify areas for improvement. Despite its challenges, synthetic monitoring offers a proactive way of ensuring that AI systems meet quality standards and perform reliably in real-world situations. As AI technology advances, the refinement and integration of synthetic monitoring techniques will play a crucial role in advancing the field and enhancing the capabilities of AI systems.