In the realm of artificial intelligence (AI), where development cycles are fast-paced and innovation is constant, optimizing Continuous Integration and Continuous Deployment (CI/CD) pipelines is essential for efficient, reliable, and scalable workflows. AI projects present unique challenges because of their complexity and the need to manage large datasets, model training, and deployment. This article explores best practices and tools for optimizing CI/CD pipelines specifically for AI projects.

Understanding CI/CD in the Context of AI

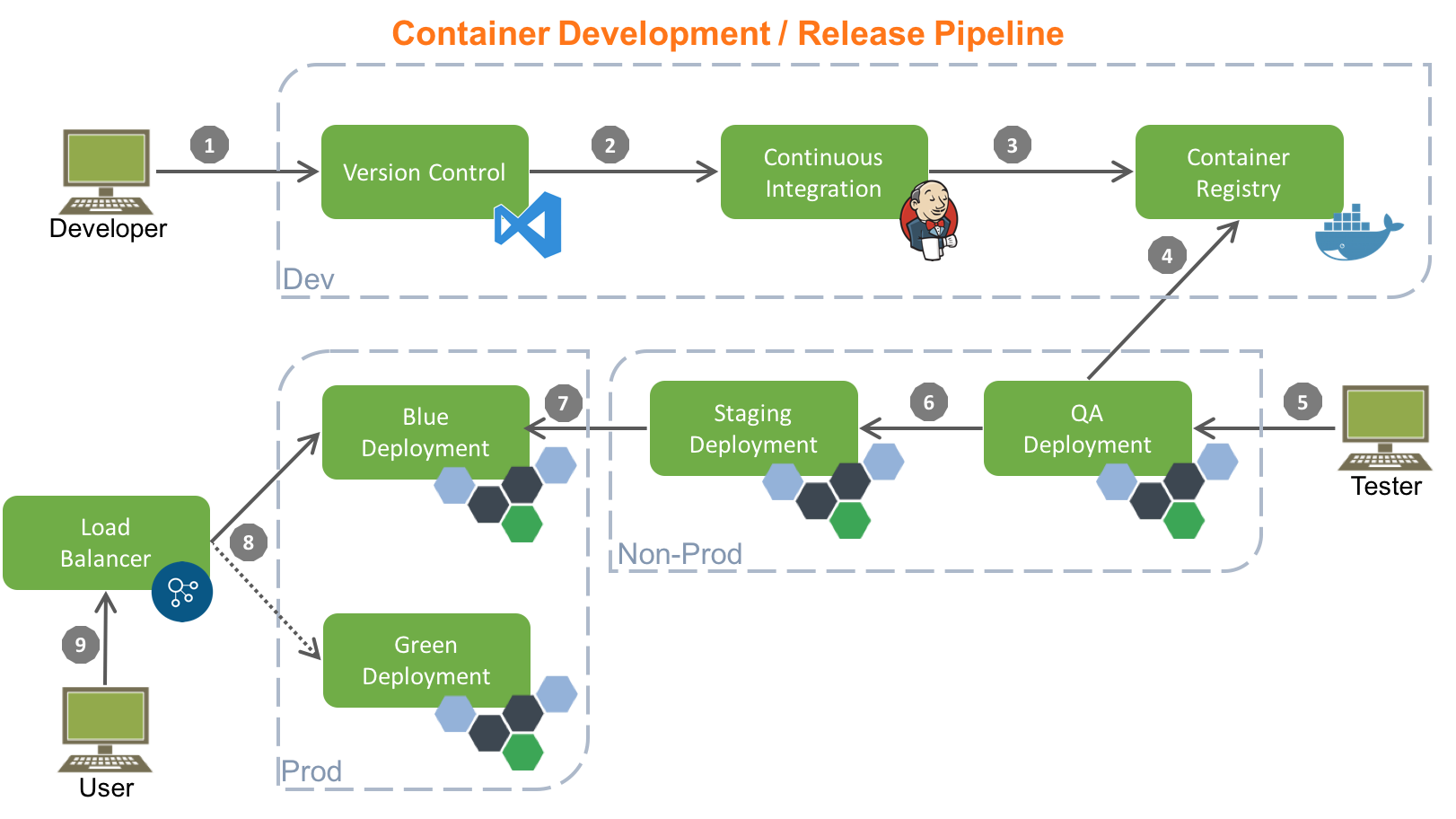

CI/CD is a set of practices that enables frequent, reliable, and automated software releases. For AI projects, this involves integrating code changes (Continuous Integration) and deploying models (Continuous Deployment) efficiently and reliably. AI pipelines are more complex than conventional software pipelines because of additional stages such as data processing, model training, and evaluation.

Best Practices for CI/CD in AI Projects

1. Modular and Scalable Pipelines

AI projects often involve several components, such as data processing, model training, and evaluation. Designing modular CI/CD pipelines that separate these components improves scalability and maintainability. For instance, having distinct stages for data preprocessing, feature engineering, model training, and testing lets teams update or scale individual components without affecting the entire pipeline.

Best Practice:

Use a microservices architecture where feasible, with separate pipelines for data processing, model training, and deployment.

Apply version control to datasets and models to ensure reproducibility.
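To make the idea of separate, independently runnable stages concrete, here is a minimal Python sketch. The `Stage` and `Pipeline` classes and the four toy stage functions are hypothetical illustrations, not part of any CI/CD tool. Each stage reads a shared context dictionary and returns only the keys it produces, so a single stage can be run or tested on its own.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, Iterable, List, Optional

Context = Dict[str, Any]


@dataclass
class Stage:
    """One independently testable pipeline step (hypothetical abstraction)."""
    name: str
    run: Callable[[Context], Context]  # returns only the keys this stage produces


@dataclass
class Pipeline:
    stages: List[Stage] = field(default_factory=list)

    def add(self, stage: Stage) -> "Pipeline":
        self.stages.append(stage)
        return self

    def execute(self, context: Context, only: Optional[Iterable[str]] = None) -> Context:
        """Run every stage in order, or just the named subset when `only` is given."""
        selected = set(only) if only is not None else None
        for stage in self.stages:
            if selected is not None and stage.name not in selected:
                continue
            # Merge outputs into a new dict so earlier results are never mutated in place.
            context = {**context, **stage.run(context)}
        return context


# Toy stages standing in for real preprocessing, feature engineering, training, and evaluation.
def preprocess(ctx: Context) -> Context:
    return {"clean": [x for x in ctx["raw"] if x is not None]}


def featurize(ctx: Context) -> Context:
    return {"features": [x / 10.0 for x in ctx["clean"]]}


def train(ctx: Context) -> Context:
    feats = ctx["features"]
    return {"model": sum(feats) / len(feats)}  # toy "model": predict the mean


def evaluate(ctx: Context) -> Context:
    feats, m = ctx["features"], ctx["model"]
    return {"mae": sum(abs(x - m) for x in feats) / len(feats)}


pipeline = (
    Pipeline()
    .add(Stage("preprocess", preprocess))
    .add(Stage("featurize", featurize))
    .add(Stage("train", train))
    .add(Stage("evaluate", evaluate))
)
```

Running `pipeline.execute({"raw": [10, None, 20, 30]})` produces a toy model of `2.0`, while passing `only=["preprocess"]` runs just that stage. That kind of isolation is what lets a CI job retest one component after a change; in a real setup each stage would more likely be a separate job or container.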

2. Automated Testing and Validation

Automated testing is critical for ensuring that code changes do not break the existing functionality of AI systems. However, AI systems require specialized testing, such as performance evaluation, model accuracy checks, and data integrity checks.

Best Practice:

Implement unit tests for data processing and model training scripts.

Use validation metrics and performance benchmarks to assess model quality.

Incorporate automated tests for data integrity and consistency.
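As a rough illustration of the data integrity tests and metric benchmarks described above, the following Python sketch checks a batch of records for missing fields and duplicate IDs, then applies a simple quality gate. The function names, the assumption that each record has an `id` field, and any threshold values are hypothetical.

```python
from typing import List, Mapping, Sequence


def check_data_integrity(rows: Sequence[Mapping[str, object]], required: Sequence[str]) -> List[str]:
    """Return human-readable problems; an empty list means the batch passed."""
    problems: List[str] = []
    seen_ids = set()
    for i, row in enumerate(rows):
        missing = [col for col in required if row.get(col) is None]
        if missing:
            problems.append(f"row {i}: missing {', '.join(missing)}")
        row_id = row.get("id")  # assumes each record carries an "id" field
        if row_id is not None:
            if row_id in seen_ids:
                problems.append(f"row {i}: duplicate id {row_id}")
            seen_ids.add(row_id)
    return problems


def passes_quality_gate(metrics: Mapping[str, float], thresholds: Mapping[str, float]) -> bool:
    """True only if every thresholded metric is present and at or above its minimum."""
    return all(
        name in metrics and metrics[name] >= minimum
        for name, minimum in thresholds.items()
    )
```

A CI job could turn a non-empty problem list or a failed gate into a non-zero exit code (for example with `sys.exit(1)`), so that a model that misses its benchmarks never reaches deployment.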

3. Continuous Monitoring and Feedback

Continuous monitoring is vital for ensuring that AI models perform well in production. This includes tracking model performance, detecting anomalies, and gathering feedback from real-world usage.

Best Practice:

Set up monitoring systems to track model performance metrics such as accuracy, precision, recall, and latency.

Use feedback loops to continuously retrain and improve models based on real-world data and performance.
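The sketch below shows, under simplifying assumptions, how the metrics named above could be computed from logged predictions and request latencies, and how a drop against a baseline could flag a model for retraining. It assumes binary labels and uses a nearest-rank percentile for latency; the helper names and the 0.05 tolerance are hypothetical placeholders. Production systems would typically compute these in a dedicated monitoring stack rather than by hand.

```python
import math
from typing import Dict, List, Mapping, Sequence


def classification_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy, precision, and recall for binary labels (1 = positive class)."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and equally long")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    correct = sum(1 for t, p in pairs if t == p)
    return {
        "accuracy": correct / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


def latency_percentile(latencies_ms: Sequence[float], pct: int = 95) -> float:
    """Nearest-rank percentile of observed request latencies."""
    ordered = sorted(latencies_ms)
    if not ordered:
        raise ValueError("no latencies recorded")
    rank = max(1, math.ceil(len(ordered) * pct / 100))
    return ordered[rank - 1]


def degraded_metrics(current: Mapping[str, float], baseline: Mapping[str, float],
                     tolerance: float = 0.05) -> List[str]:
    """Names of metrics that fell more than `tolerance` below their baseline value."""
    return sorted(name for name, base in baseline.items()
                  if current.get(name, 0.0) < base - tolerance)
```

Feeding the names returned by `degraded_metrics` into an alert or a retraining job is one way to close the feedback loop described above.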

4. Data Management and Versioning

Effective data management is essential in AI projects. Handling large datasets, ensuring data quality, and managing data versions are key challenges that can affect model performance and pipeline efficiency.

Best Practice:

Implement data versioning tools to track changes in datasets and ensure reproducibility.

Use data management platforms that support large-scale data processing and integration with CI/CD pipelines.
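Dedicated data versioning tools handle this at scale, but the core idea can be sketched with a content hash: if any file in a dataset directory changes, its fingerprint changes. The following Python sketch is a simplified stand-in, not the behavior of any particular tool. `dataset_fingerprint` and `record_version` are hypothetical helpers, and the manifest file is assumed to live outside the dataset directory so that it is not hashed itself.

```python
import hashlib
import json
from pathlib import Path

CHUNK_SIZE = 1 << 20  # read files in 1 MiB chunks so large files never load fully into memory


def dataset_fingerprint(root: Path) -> str:
    """SHA-256 over every file's relative path and contents, in a stable order."""
    digest = hashlib.sha256()
    files = sorted((p for p in root.rglob("*") if p.is_file()),
                   key=lambda p: p.relative_to(root).as_posix())
    for path in files:
        digest.update(path.relative_to(root).as_posix().encode("utf-8") + b"\0")
        with path.open("rb") as fh:
            for chunk in iter(lambda: fh.read(CHUNK_SIZE), b""):
                digest.update(chunk)
        digest.update(b"\0")
    return digest.hexdigest()


def record_version(root: Path, manifest: Path) -> str:
    """Append the dataset's fingerprint to a JSON manifest if it changed; return it.

    Assumes `manifest` is outside `root`, otherwise it would be hashed too.
    """
    fingerprint = dataset_fingerprint(root)
    history = json.loads(manifest.read_text()) if manifest.exists() else []
    if not history or history[-1] != fingerprint:
        history.append(fingerprint)
        manifest.write_text(json.dumps(history, indent=2))
    return fingerprint
```

Logging the returned fingerprint alongside each training run lets a later run confirm that it is using exactly the same data.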

5. Infrastructure as Code (IaC)

Using Infrastructure as Code (IaC) tools helps automate the setup and management of the computing resources that AI projects require. This ensures that environments are consistent and reproducible across different stages of development and deployment.

Best Practice:

Use IaC tools like Terraform or AWS CloudFormation to manage infrastructure resources such as compute instances, storage, and networking.

Define environments (e.g., development, staging, production) in code to ensure consistency and ease of deployment.
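Terraform and CloudFormation use their own declarative formats, so the following is not their syntax. It is a minimal Python sketch of the underlying principle: every environment is derived from one base definition in code, differences are explicit overrides, and invalid settings are rejected before anything is provisioned. The `EnvironmentSpec` fields and the `gpu-medium`/`gpu-large` size labels are hypothetical.

```python
from dataclasses import asdict, dataclass, replace
from typing import Any, Dict, Tuple


@dataclass(frozen=True)
class EnvironmentSpec:
    name: str
    instance_type: str  # hypothetical machine size label, not a real cloud SKU
    gpu_count: int
    storage_gb: int
    min_replicas: int
    max_replicas: int

    def __post_init__(self) -> None:
        # Reject inconsistent definitions before anything is provisioned.
        if not 0 < self.min_replicas <= self.max_replicas:
            raise ValueError(f"{self.name}: need 0 < min_replicas <= max_replicas")


BASE = EnvironmentSpec(name="base", instance_type="gpu-medium", gpu_count=1,
                       storage_gb=500, min_replicas=1, max_replicas=2)

# Every environment derives from the same base, so differences are explicit overrides.
ENVIRONMENTS: Dict[str, EnvironmentSpec] = {
    "development": replace(BASE, name="development"),
    "staging": replace(BASE, name="staging", max_replicas=4),
    "production": replace(BASE, name="production", instance_type="gpu-large",
                          gpu_count=4, min_replicas=3, max_replicas=20),
}


def diff(a: EnvironmentSpec, b: EnvironmentSpec) -> Dict[str, Tuple[Any, Any]]:
    """Fields (other than the name) whose values differ between two environments."""
    da, db = asdict(a), asdict(b)
    return {k: (da[k], db[k]) for k in da if k != "name" and da[k] != db[k]}
```

Because `diff` reports only the intended overrides, reviewing a change to staging or production reduces to reading a small, explicit set of differences.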

6. Security and Compliance

Security and compliance are crucial aspects of CI/CD pipelines, especially in AI projects that handle sensitive or regulated data. Ensuring secure access, data protection, and regulatory compliance is essential.

Best Practice:

Implement role-based access control and secure authentication methods.

Use encryption for data at rest and in transit.

Ensure compliance with relevant regulations (e.g., GDPR, HIPAA) and conduct regular security audits.
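Role-based access control can be sketched as a mapping from roles to permissions with deny-by-default checks. In the Python sketch below, the role names, the `Permission` values, the `AccessDenied` exception, and the in-memory `AUDIT_LOG` are all hypothetical; a real pipeline would rely on its CI platform's or cloud provider's identity and access management and write audit records to durable storage.

```python
from enum import Enum
from typing import Dict, FrozenSet, Iterable, List, Tuple


class Permission(Enum):
    READ_DATA = "read_data"
    READ_SENSITIVE_DATA = "read_sensitive_data"
    TRAIN_MODEL = "train_model"
    DEPLOY_MODEL = "deploy_model"


class AccessDenied(Exception):
    """Raised when none of the caller's roles grants the requested permission."""


# Hypothetical roles; any role not listed here gets no permissions (deny by default).
ROLE_PERMISSIONS: Dict[str, FrozenSet[Permission]] = {
    "data_scientist": frozenset({Permission.READ_DATA, Permission.TRAIN_MODEL}),
    "release_manager": frozenset({Permission.DEPLOY_MODEL}),
    "ci_runner": frozenset({Permission.READ_DATA, Permission.TRAIN_MODEL, Permission.DEPLOY_MODEL}),
    "privacy_officer": frozenset({Permission.READ_SENSITIVE_DATA}),
}

# Each entry: (sorted roles, permission requested, whether it was granted).
AUDIT_LOG: List[Tuple[Tuple[str, ...], str, bool]] = []


def is_allowed(roles: Iterable[str], permission: Permission) -> bool:
    return any(permission in ROLE_PERMISSIONS.get(role, frozenset()) for role in roles)


def require(roles: Iterable[str], permission: Permission) -> None:
    """Record every decision for later audits, then raise if access is denied."""
    role_tuple = tuple(sorted(roles))
    allowed = is_allowed(role_tuple, permission)
    AUDIT_LOG.append((role_tuple, permission.value, allowed))
    if not allowed:
        raise AccessDenied(f"roles {list(role_tuple)} lack {permission.value}")
```

Logging denied requests as well as granted ones gives the regular security audits mentioned above a complete trail to review.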

Tools for Optimizing CI/CD Pipelines in AI Projects

Several tools and platforms are available to help optimize CI/CD pipelines for AI projects. Here are some notable ones:

1. Jenkins

Jenkins is a widely used open-source CI/CD tool that supports a range of plugins for building, deploying, and automating AI workflows. Its flexibility and extensibility make it suitable for complex AI pipelines.

Features:

Extensive plugin ecosystem

Customizable pipelines

Integration with various version control systems and deployment tools

2. GitLab CI/CD

GitLab CI/CD offers a comprehensive suite of tools for managing the entire software development lifecycle, including AI projects. Its built-in CI/CD capabilities streamline the process from code integration to deployment.

Features:

Integrated version control and CI/CD

Built-in Docker container support

Automated testing and deployment

3. TensorFlow Extended (TFX)

TensorFlow Extended (TFX) is an end-to-end platform for managing machine learning workflows, including data preprocessing, model training, and deployment. TFX integrates with CI/CD tools to facilitate automated machine learning pipelines.

Features:

Components for data validation, transformation, and model serving

Integration with TensorFlow and other ML frameworks

Support for scalable, production-ready pipelines

4. MLflow

MLflow is an open-source platform for managing the ML lifecycle, including experimentation, reproducibility, and deployment. It offers tools for tracking experiments, packaging code, and sharing results.

Features:

Experiment tracking and versioning

Model packaging and deployment

Integration with various ML frameworks and environments

5. Kubernetes

Kubernetes is a container orchestration platform that can manage and scale AI workloads. It automates the deployment, scaling, and operation of containerized applications, including AI models.

Features:

Automated container management and scaling

Integration with CI/CD pipelines

Support for various AI frameworks and tools

Conclusion

Optimizing CI/CD pipelines for AI projects involves adopting best practices and leveraging the right tools to address the unique challenges of AI development. By focusing on modularity, automation, monitoring, and data management, and by using tools like Jenkins, GitLab CI/CD, TFX, MLflow, and Kubernetes, teams can streamline their workflows and improve the efficiency and reliability of their AI deployments. As AI continues to evolve, maintaining an adaptable and optimized CI/CD pipeline will be crucial to staying competitive and delivering innovative solutions.